An automatic, secure, distributed, peer-to-peer, time-tested, full-mesh VPN with transitive connections (that is, forwarding messages when there is no direct link between nodes), no single point of failure, low resource consumption, and the ability to traverse NAT (“hole punching”): is it possible?

Article published on Dev.To

Overview of Tinc

Unfortunately, Tinc VPN does not have as large a community as OpenVPN or similar solutions; however, there are some good tutorials:

- How To Install Tinc and Set Up a Basic VPN on Ubuntu 14.04 by Digital Ocean

- How to Set up tinc, a Peer-to-Peer VPN by Linode

The Tinc man page is always a good source of truth.

According to the official site, Tinc VPN is a service (the tincd daemon) that creates a private network by tunneling and encrypting traffic between nodes. The source code is open and available under the GPL2 license. As with classic solutions such as OpenVPN, the resulting virtual network works at the IP level (OSI layer 3), which generally means that applications do not need to be modified.

Key features:

- encryption, authentication and compression of traffic;

- a fully automatic full-mesh solution: nodes connect to each other directly (peer-to-peer) or, when that is not possible, messages are forwarded through intermediate hosts;

- NAT traversal (“hole punching”);

- the ability to connect isolated networks at the Ethernet level (virtual switch);

- support for multiple operating systems: Linux, FreeBSD, OS X, Solaris, Windows, etc.

Tinc has two development branches: 1.0.x (available in almost all repositories) and 1.1 (a perpetual beta). This article uses only version 1.0.x.

In my view, one of Tinc's strongest features is its ability to forward messages through peers when a direct connection is not possible. Routing tables are built automatically, and even nodes without a public address can act as relays.

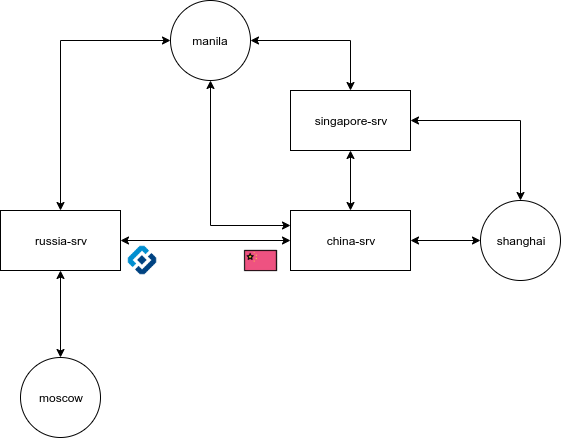

Consider a situation with three servers (China, Russia, Singapore) and three clients (Russia, China and the Philippines):

- servers have public addresses; clients are behind NAT;

- because of Russian censorship rules, all foreign networks were eventually blocked except “friendly” China (unfortunately, not so unrealistic);

- the China <-> Russia network border is unstable and may go down (because of both countries’ censorship rules);

- connections to Singapore are quite stable (from personal experience);

- Manila (Philippines) poses no threat to anyone and is therefore allowed by everyone (being far from everyone and everything).

Using the traffic exchange between Shanghai and Moscow as an example, consider the following Tinc scenarios (approximately):

1. Normal situation: Moscow <-> russia-srv <-> china-srv <-> Shanghai

2. Censorship has closed the connection to China: Moscow <-> russia-srv <-> Manila <-> Singapore <-> Shanghai

3. (Following scenario 2) if the Singapore server fails, traffic is rerouted through the server in China, and vice versa.

Whenever possible, Tinc tries to establish a direct connection between two nodes behind NAT using hole punching.
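UDP hole punching generally works by having both peers send packets to each other's public endpoint at about the same time, so that each NAT creates a mapping that admits the other side's packets; the peers learn those endpoints through a node they can both reach. The Go sketch below only illustrates that simultaneous-send pattern on the loopback interface, where there is no NAT at all; it is not how tincd implements traversal internally, and the message format is made up.

```go
package main

import (
	"fmt"
	"net"
	"time"
)

// punch sends a few probes to peer and returns the first datagram that comes
// back from peer. Behind real NATs both sides do this at about the same time:
// each outgoing probe opens a mapping in the sender's own NAT through which
// the other side's probes can then arrive.
func punch(conn *net.UDPConn, peer *net.UDPAddr, name string) (string, error) {
	for i := 0; i < 3; i++ {
		if _, err := conn.WriteToUDP([]byte("hello from "+name), peer); err != nil {
			return "", err
		}
	}
	if err := conn.SetReadDeadline(time.Now().Add(2 * time.Second)); err != nil {
		return "", err
	}
	buf := make([]byte, 1500)
	for {
		n, from, err := conn.ReadFromUDP(buf)
		if err != nil {
			return "", err // includes the read deadline expiring
		}
		if from.Port == peer.Port && from.IP.Equal(peer.IP) {
			return string(buf[:n]), nil
		}
	}
}

// demo runs both peers on loopback. In reality each peer would learn the
// other's public address and port from a node that both can reach.
func demo() (gotByA, gotByB string, err error) {
	loopback := &net.UDPAddr{IP: net.IPv4(127, 0, 0, 1)}
	a, err := net.ListenUDP("udp4", loopback)
	if err != nil {
		return "", "", err
	}
	defer a.Close()
	b, err := net.ListenUDP("udp4", loopback)
	if err != nil {
		return "", "", err
	}
	defer b.Close()

	// Run peer A concurrently: each side blocks reading until the other sends.
	errA := make(chan error, 1)
	go func() {
		var e error
		gotByA, e = punch(a, b.LocalAddr().(*net.UDPAddr), "A")
		errA <- e
	}()
	gotByB, errB := punch(b, a.LocalAddr().(*net.UDPAddr), "B")
	if e := <-errA; e != nil {
		return "", "", e
	}
	return gotByA, gotByB, errB
}

func main() {
	gotByA, gotByB, err := demo()
	fmt.Println(gotByA, "|", gotByB, "|", err)
}
```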

Brief introduction to Tinc configuration

Tinc is positioned as an easy-to-configure service; however, something went wrong. To create a new node, you need at a minimum to:

- describe the general node configuration (type, name) in `tinc.conf`;

- describe the node's host file (served subnets, public addresses) in `hosts/`;

- create a key;

- create a script that sets the host address and related parameters (`tinc-up`);

- preferably, create a script that removes those parameters after the service stops (`tinc-down`).

In addition, when joining an existing network, you must obtain the existing hosts' keys and provide your own.

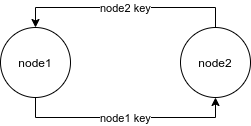

That is, for the second node

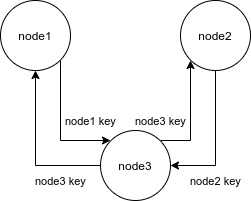

For the third node

When two-way synchronization is used (for example, with unison), the number of additional operations grows to N, where N is the number of public nodes.

To give the Tinc developers their due: to join an existing network, you only need to exchange keys with one of the nodes (the bootnode). After the service starts and connects to that node, tinc obtains the network topology and can work with all members.

However, if the boot host becomes unavailable and tinc is then restarted, there is no way to reconnect to the virtual network.

Moreover, tinc's enormous flexibility, combined with the project's academic documentation (thorough, but with few examples), leaves plenty of room for mistakes.

Reasons to create tinc-boot

Generalizing the problems described above and formulating them as tasks, we arrive at the following requirements:

- simplify the process of creating a new node;

- ideally, a single short shell command given to an ordinary user (a “Power User” in Windows terms) should be enough to create a new node and join the network (I had in mind something like connecting my grandad, a former tech guy);

- provide automatic distribution of keys between all active nodes;

- provide a simplified procedure for exchanging keys between the bootnode and the new client.

Here, a bootnode is a node with a public address.

Thanks to the automatic key distribution task above, we can guarantee that once the bootnode and the new node have exchanged keys and the new node has connected to the network, the new key will be distributed automatically.

tinc-boot was created to solve these tasks.

tinc-boot is a self-contained (apart from tinc itself) open-source application that provides:

- simple creation of a new node;

- automatic connection to an existing network;

- default values for most parameters;

- distribution of keys between nodes.

Architecture

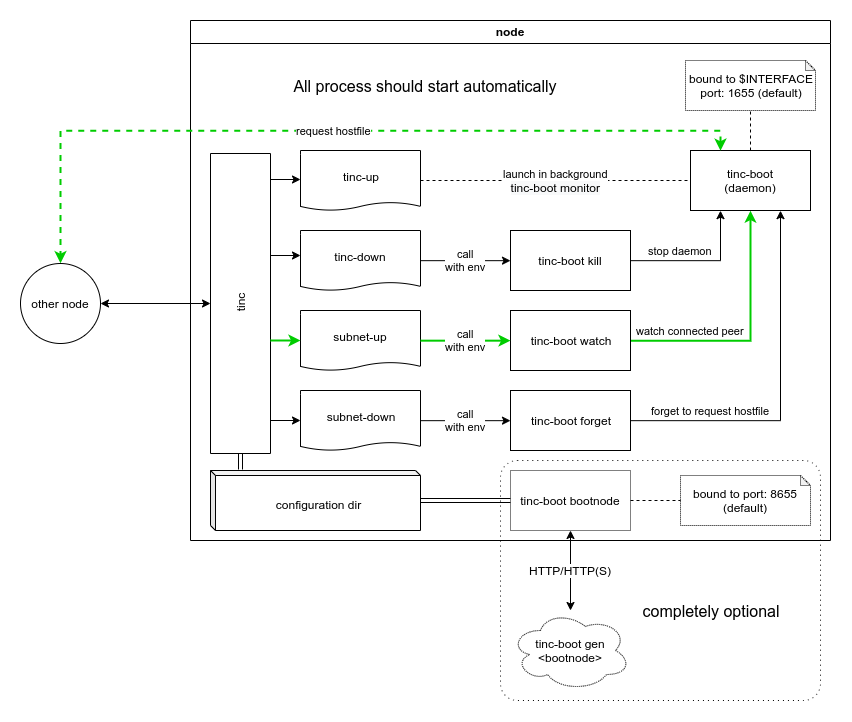

The tinc-boot executable contains four components: a bootnode server, a key distribution server, RPC commands for controlling it, and a node generation module.

Module: config generation

The node generation module (tinc-boot gen) creates all the necessary files for tinc to run successfully.

Simplified, its algorithm can be described as follows:

- Define the host name, network, IP parameters, port, subnet mask, etc.

- Normalize them (tinc has a limit on some values) and create the missing ones

- Check parameters

- If necessary - install

tinc-bootto the system (could be disabled) - Create scripts

tinc-up,tinc-down,subnet-up,subnet-down - Create a configuration file

tinc.conf - Create a host file

hosts / - Perform key generation (4096 bits)

- Exchange keys with bootnode

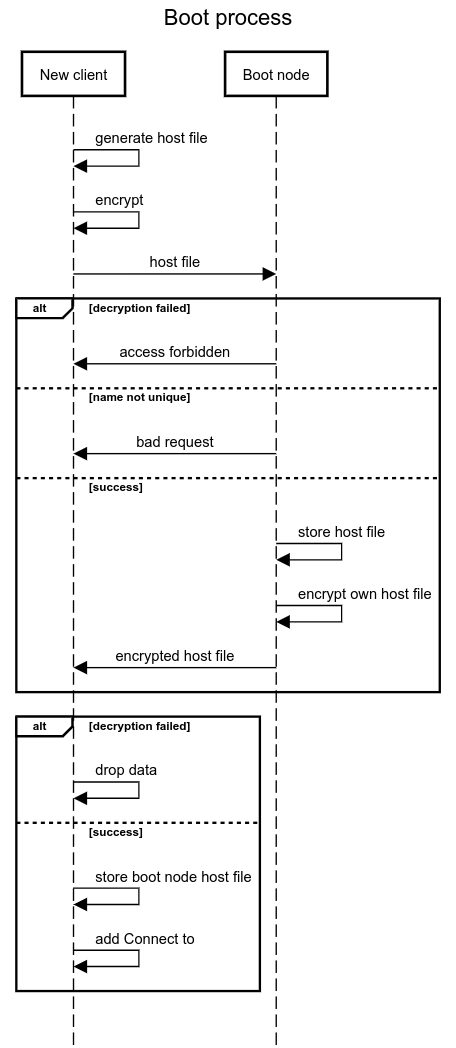

- Encrypt and sign own host file with a public key, a random initialization vector (nounce) and host name using xchacha20poly1305, where the encryption key is the result of the sha256 function from the token

- Send data via HTTP/HTTPS protocol to bootnode

- Receive answer and the header

X-Nodecontaining the name of the boot node, decrypt using the original nounce and the same algorithm - If successful, save the received key in

hosts /and add theConnectToentry to the configuration file (ie a recommendation where to connect during tinc start) - Otherwise, use the address of the boot node next in the list and repeat from step 2

- Print recommendations for starting the service

The SHA-256 conversion is used only to normalize the key to 32 bytes.

For the very first node (that is, when there is no boot address to specify), step 9 is skipped by passing the `--standalone` flag.

Example 1 - creating the first public node

Assume that the public address is 1.2.3.4:

```bash
sudo tinc-boot gen --standalone -a 1.2.3.4
```

- the `-a` flag specifies publicly accessible addresses.

Example 2 - adding a non-public node to the network

The bootnode is the one from the example above. That host must be running `tinc-boot bootnode` (described below).

```bash
sudo tinc-boot gen --token "<MY TOKEN>" http://1.2.3.4:8655
```

- the `--token` flag sets the authorization token.

Bootstrap module

The boot module (`tinc-boot bootnode`) runs an HTTP server that provides an API for exchanging keys with new clients.

By default it uses port 8655.

Simplified, the algorithm can be described as follows:

1. Accept a request from a client.

2. Decrypt and verify the request with xchacha20poly1305, using the initialization vector (nonce) passed in the request; the encryption key is the SHA-256 hash of the token.

3. Check the name.

4. Save the file if no file with the same name exists yet.

5. Encrypt and sign its own host file and name using the algorithm described above.

6. Return to step 1.

Together, the primary key exchange process is as follows:

Example 1 - start bootnode

This assumes that the node has already been initialized (`tinc-boot gen`).

```bash
tinc-boot bootnode --token "MY TOKEN"
```

- the `--token` flag sets the authorization token. Clients connecting to this host must use the same token.

Example 2 - start bootnode as a system service

```bash
tinc-boot bootnode --service --token "MY TOKEN"
```

- the `--service` flag creates a systemd service (in this example, `tinc-boot-dnet.service` by default);

- the `--token` flag sets the authorization token. Clients connecting to this host must use the same token.

Module: key distribution

The key distribution module (`tinc-boot monitor`) starts an HTTP server with an API for exchanging keys with other nodes inside the VPN. It binds to the node's address in the VPN (the default port is 1655); since each network has (or must have) its own address, several instances do not conflict.

There is no need to manage the module manually: it starts automatically when the network comes up (in the `tinc-up` script) and stops automatically when it goes down (in the `tinc-down` script).

Supported operations:

- `GET /` returns this node's host file;

- `POST /rpc/watch?Node=<>&subnet=<>` fetches the file from another node, assuming a similar service is running there. By default, each attempt times out after 10 seconds, and attempts repeat every 30 seconds until success or cancellation;

- `POST /rpc/forget?Node=<>` cancels any pending attempts to fetch the file from another node;

- `POST /rpc/kill` terminates the service.

In addition, every minute (by default) and whenever a new host file is received, the saved nodes are indexed to find new public nodes (those with a public IP). When nodes with an `Address` field are found, an entry is added to the `tinc.conf` configuration file recommending a connection to them on restart.

Module: key distribution control

The commands for requesting another node's configuration file (`tinc-boot watch`) and for cancelling that request (`tinc-boot forget`) are executed automatically when a node appears (`subnet-up` script) and when it disappears (`subnet-down` script), respectively.

When the service stops, the `tinc-down` script runs, and the `tinc-boot kill` command in it stops the key distribution module.

To summarize

This utility grew out of the cognitive dissonance between the genius of the Tinc developers and the linearly growing complexity of setting up new nodes.

The main ideas in the development process were:

- if something can be automated, it must be automated;

- default values should cover at least 80% of use cases (the Pareto principle);

- any value can be overridden with flags as well as with environment variables (the Twelve-Factor App);

- the utility should help, not make users want to call down all the wrath of heaven on its author;

- using an authorization token for the initial setup is an obvious risk; however, it was minimized as far as possible by encrypting and authenticating everything (even the host name in the response header cannot be tampered with).

During development, I actively tested on real servers and clients (the picture in the Tinc overview above is taken from real life). So far, the system has worked flawlessly.

The application is written in Go and released under the MPL 2.0 license. Loosely speaking, the license allows commercial use (should anyone need it) without opening the source of your own product; the main requirement is that modifications to tinc-boot's own source files be made available under the same license when distributed.

Pull requests are welcome.